By Chris Grayson

In 2012, Google unveiled Google Glass and within a year made the proprietary smartglass available to consumers under their Glass Explorer program. With much fanfare, a partnership was launched with Diane von Furstenberg, and the device even starred in a multipage pictorial for Vogue.

Despite initial excitement among some consumers and eyecare practitioners, Google Glass had issues from the start. Its futuristic, geeky look was off-putting to many consumers, its built-in video camera seemed intrusive to those concerned about privacy, and it had a high price point.

Ultimately, Google withdrew Glass from the consumer market. But it quickly found new life as an “enterprise” product, marketed for warehousing, logistics, manufacturing plants, field workers in the power and energy sector, as well as first responders.

The fact that Glass failed to click with consumers has led some in the optical industry to write off the whole category of consumer smartglasses. But that judgement may be premature. The next wave of smartglasses is coming, and optical retailers and eyecare professionals may want to reconsider the product category.

Several innovations have recently taken place, most notably breakthroughs in near-eye optics that will enable display systems to assume the form factor of a more traditional lens, and even to be embedded within a prescription lens. Combine these innovations with component miniaturization and incremental improvements in power consumption, and we’re closing in on an inflection point: consumer smartglasses are coming to market that are close to, if not indistinguishable from, traditional fashion eyewear frames in form factor.

In this article, we will explore both the latest technological advancements and the juggernaut: Apple’s imminent entry into the smartglasses market.

Since Google pulled Glass from the consumer market, the space has been focused on single-use-case models: audio glasses such as Bose Frames, or those from smaller players such as Zungle and Mystic. For the sports cycling market, Kopin introduced Solos and competitor Everysight of Israel introduced Raptor. These cycling glasses provide both navigation and basic health monitoring.

Perhaps second only to heads-up and hands-free display and user interaction, health tracking will be a core application of smartglasses in the consumer market.

Last year, managed vision care powerhouse VSP introduced Level glasses, with many of the features of wrist-worn wearables like Fitbit, in a smartglasses form factor. Smaller players like Jins Meme of Japan also have frames on the market that compete in this space.

The real breakthroughs in health monitoring will come when eye-tracking/pupil tracking is fully incorporated, though this is likely a couple of generations away in consumer products. While eye-tracking has existing user interface applications, in the realm of healthcare it will allow for real-time tracking beyond any capability of a wrist-worn device.

In a paper published last year in the journal Nature Biomedical Engineering, scientists from Verily (a Google subsidiary) demonstrated how artificial intelligence analysis could be applied to eye-tracking to identify heart disease. Roy Chuck, MD, PhD, of the Department of Ophthalmology and Visual Sciences at the Albert Einstein College of Medicine has also demonstrated that diabetes can be detected early via eye-tracking. There is also Dr. Melodie Vidal’s research at Lancaster University, which demonstrates the ability to recognize certain kinds of autism, some kinds of schizophrenia and even early-onset Alzheimer’s disease via eye-tracking. She has since joined North, maker of Focals smartglasses, as director of human-computer interactions.

This leads us to a patent filing disclosed by the U.S. Patent Office in April of this year that revealed an Apple smartglasses design with an array of sensors including head-positioning, jawbone movement, photoplethysmography sensors, various photodiodes and optical sensors, electrocardiography sensors, galvanic skin response sensors, and others that track posture and stress levels.

While a patent filing does not ensure these features will appear in a commercialized Apple smartglass, it is a clear indication that Apple will be making health monitoring a central feature of their smartglasses strategy. Their Apple Watch Series 4 now features their ECG app, and is capable of generating an ECG “similar to a single-lead electrocardiogram.”

WHY NOW?

Apple is not a first mover. Apple lets everyone else make the mistakes, then learns from them and moves into the market.

There were plenty of MP3 players before the iPod… but few remember them. As early as 1999, several PC makers introduced devices based upon Microsoft’s specification for a “Tablet PC.” Few remember these early failures now that Apple has introduced the iPad. The same can be said of market leaders like Nokia or Blackberry with the introduction of the iPhone.

While some may note that Apple only commands 19 percent of the global smartphone market, they capture a staggering 87 percent of global smartphone profits—according to Forbes/Canaccord Genuity’s latest market data.

When Apple enters a market, they tend to dominate. Perhaps the most applicable parallel is Apple’s move into smartwatches.

When Apple unveiled the Apple Watch in September 2014, many in the Swiss watch industry scoffed. By Q1 2018, Apple sold more Apple Watches than the entire Swiss watch industry combined.

AN OPPORTUNITY FOR ECPS

Since the launch of the Apple Watch, the company has been making a strategic move into not just wearable devices, but an integrated system of body-worn technology. In December 2016, they followed with wireless AirPods. With an Apple Watch, AirPods and Apple smartglasses, there would rarely be a need to take out an iPhone at all.

In 2017, Bloomberg’s Apple analyst Mark Gurman said that his sources indicated Apple would introduce a pair of smartglasses by 2020. In March of this year, notable Taiwanese Apple analyst Ming-Chi Kuo stated that Apple could begin production on their smartglasses as soon as late 2019, for a 2020 product launch. In typical Apple fashion, the leaks and rumors keep coming. In late April, Guilherme Rambo of 9to5Mac added to the hype by publishing a “leak” that Apple’s June Worldwide Developers Conference will unveil support for “stereo AR headsets” (smartglasses) in the newest upgrade to their ARKit developer software. All these signals indicate that Apple smartglasses are imminent.

Apple’s retail strategy will need to integrate eyecare professionals, but it could also be disruptive to optical retailers, because Apple controls its own sales channel through its network of Apple Stores.

As other tech industry players respond to Apple’s entry into the market, significant opportunities could present themselves to ECPs who have their eyes open. Operating at the junction of retail and health, and given the personalized nature of eyeglasses, ECPs are ideally positioned to capitalize on these trends.

Is your business or practice ready?

MORE THAN A PRETTY FACE

The next generation of smartglasses will be more than just an aesthetic upgrade; they will also be a generational leap from the Google Glass variety of devices in both functionality and user interface. The display in Glass produces the illusion of an oversized flat screen floating in the wearer’s peripheral vision, and the device has a touch-sensitive bar on the adjacent temple. It is a rudimentary display, and the user input is rather basic.

Next-generation smartglasses have a discrete display integrated directly into a prescription lens. Some are “stereoscopic,” which is to say the content is three-dimensional, inserted into the wearer’s view of the real world. This is known as augmented reality.

Next-generation smartglasses also have audio input with AI smart assistant integration. Be it Alexa, Cortana, Siri or another, your preferred assistant is at your beck and call.

The most advanced smartglasses have depth sensors. Some sensors map the user’s surroundings, giving the device a three-dimensional understanding of the environment. Other sensors can track hand gestures for input. Enterprise and developer products like Microsoft HoloLens and MagicLeap One are already available to purchase, though not yet in a form factor that is viable for a consumer product… but that is coming.

The first step toward consumer-viable smartglasses will come with discrete display systems. Focals by North already have a display integrated into a prescription lens.

NEAR-EYE OPTICS DISPLAY BREAKTHROUGHS

The dominant display technologies at the forefront of this revolution in consumer form-factor smartglasses include “waveguides” and “laser to holographic combiners.”

Waveguides themselves can be divided into holographic waveguides and surface relief waveguides (and surface relief further into additive and subtractive manufactured designs).

WAVEGUIDES

Waveguides are an adaptation of technology first patented in 1970 by researchers at Corning Glass, to enable multiple data-streams to flow through a fiberoptic cable. While fiberoptic cables are an extruded cylinder, waveguide technology can also transfer light through a plane.

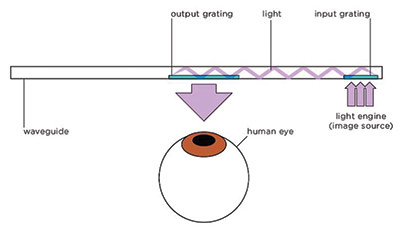

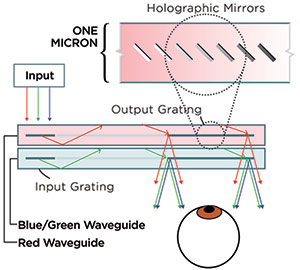

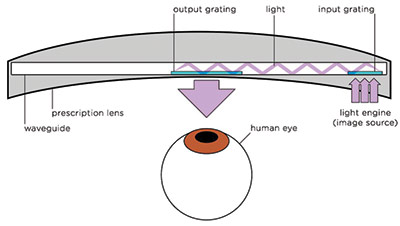

While light can flow through a plane, planar waveguides need additional optics to be useful as a near-eye display technology: optical elements to get an image into one end of the waveguide, and then more optics to turn the image out in front of the eye. These optics are referred to as an “input grating” and an “output grating.”
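As a rough illustration of why the input grating matters, the sketch below checks whether light bent by a grating stays trapped inside a flat piece of glass. It assumes the textbook physics of total internal reflection and the standard diffraction grating equation; the function names, the refractive index of 1.5, the green 532 nm wavelength and the grating pitches are illustrative assumptions, not figures from any product described here.

```python
import math


def critical_angle_deg(n_glass: float, n_outside: float = 1.0) -> float:
    """Angle from the surface normal beyond which light is totally
    internally reflected and therefore stays inside the glass."""
    return math.degrees(math.asin(n_outside / n_glass))


def diffracted_angle_deg(wavelength_nm, pitch_nm, n_glass,
                         incidence_deg=0.0, order=1):
    """Angle of the beam inside the glass after the input grating.

    Uses the grating equation for light entering from air:
        n_glass * sin(theta_d) = sin(theta_i) + order * wavelength / pitch
    Returns None when that diffraction order cannot propagate.
    """
    s = (math.sin(math.radians(incidence_deg))
         + order * wavelength_nm / pitch_nm) / n_glass
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


def is_guided(wavelength_nm, pitch_nm, n_glass, incidence_deg=0.0):
    """True when the diffracted beam is steeper than the critical angle,
    so it bounces along the plane instead of leaking out."""
    theta = diffracted_angle_deg(wavelength_nm, pitch_nm, n_glass, incidence_deg)
    return theta is not None and theta > critical_angle_deg(n_glass)


if __name__ == "__main__":
    # Hypothetical values: green light, glass with index 1.5.
    for pitch in (1000, 400, 300):
        print(pitch, is_guided(532, pitch, 1.5))
```

In this simplified model, a grating that is too coarse bends the light too little to trap it, while one that is too fine produces no propagating beam at all. Real designs also have to handle a range of colors and viewing angles, which this sketch ignores.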

For these kinds of waveguides to be practical, a complementary technology also had to be developed: a miniaturized micro-display, known as a “light engine,” that shines an image into the input grating end of the optical system.

By the 1990s, engineers including those at Sony were experimenting with planar waveguides as a means of placing an image from a micro-display in front of the eye. Even the earliest holographic waveguide displays first appeared back in the ’90s.

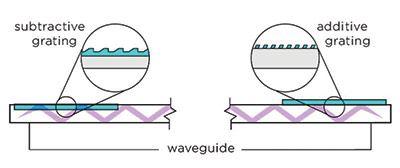

In the 2000s, Nokia invented a waveguide display that employed micro-optical elements engraved into the surface of a plane to facilitate the input and output gratings. This IP would eventually land in the hands of Microsoft, through their Nokia acquisition. These lenses became known as subtractive-manufactured surface relief waveguides.

Soon others, most notably the heavily venture-funded MagicLeap (through their own acquisition of Molecular Imprints), employed a manufacturing process for input and output gratings on a surface relief waveguide using an additive method known as nano-lithography: the optical elements are “printed” at a molecular scale onto the surface of the plane.

Still other companies focused on “holographic” optical elements for the input and output gratings, most notably DigiLens. The holographic waveguides use a technique not dissimilar to the eagle security hologram featured on most credit cards. Holograms like those on a credit card are exposed into a transparent substrate, which is then mounted onto a reflective surface, so that ambient light can reflect back through the hologram (which must be backlit) to create the image the cardholder sees.

Now imagine, instead of an eagle, a hologram of columns of optical elements that behave like mirrors. These holographic mirrors allow for input and output gratings of only a few microns in thickness. Further, DigiLens has perfected a method of manufacturing their waveguides from a liquid-crystal-based polymer, making these holographic optical elements electro-active. These are also known as switchable Bragg gratings.

LASER TO HOLOGRAPHIC COMBINER

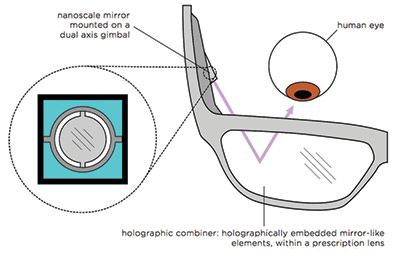

Pico-laser-based near-eye displays were pioneered by Microvision. With this kind of display system, a laser is bounced off a micro-mirror mounted on a dual-axis gimbal. Early versions of these Microvision displays simply used a beam-splitter, otherwise known as a two-way mirror, to combine the view of the real world with the view of virtual content. Over time, a more sophisticated optical combiner was developed, similar to the holographic waveguide: a series of micro-mirror-like holographic elements could be embedded inside a lens and the laser targeted at them, to reflect into the user’s eye. In their current form, these laser displays have one distinct shortcoming compared to waveguides: a very narrow field of view (that being the width of the user’s view that can be augmented with virtual content). But as a competitor to waveguides, they also have a tremendous advantage: lens-crafters like Interglass of Switzerland and the Canadian consumer smartglasses brand North have shown that this kind of laser-based display can be embedded within a traditional prescription lens.
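To make the scanning idea concrete, here is a minimal sketch of a laser beam steered by a two-axis mirror. It assumes an idealized, evenly stepped raster; real scanners often swing one axis resonantly, and the field of view the wearer actually sees also depends on the combiner optics. The function names and tilt values are hypothetical. The one piece of physics relied on is that rotating a mirror by a given angle turns the reflected beam by twice that angle.

```python
def optical_scan_range_deg(mech_half_angle_deg: float) -> float:
    """Full sweep of the reflected beam for a mirror tilting
    +/- mech_half_angle_deg. Reflection doubles any mirror rotation,
    so the full sweep is four times the mechanical half angle."""
    return 4.0 * mech_half_angle_deg


def raster_mirror_angles(cols, rows, h_half_deg, v_half_deg):
    """Yield (row, col, h_tilt_deg, v_tilt_deg): the mechanical tilt on
    each axis for every pixel, scanning left to right, top to bottom."""
    for r in range(rows):
        v = v_half_deg * (1 - 2 * r / (rows - 1)) if rows > 1 else 0.0
        for c in range(cols):
            h = h_half_deg * (2 * c / (cols - 1) - 1) if cols > 1 else 0.0
            yield r, c, h, v


if __name__ == "__main__":
    # Hypothetical mirror that tilts +/- 5 degrees horizontally.
    print(optical_scan_range_deg(5.0))
    for pixel in raster_mirror_angles(3, 2, 6.0, 4.0):
        print(pixel)
```

Because the beam sweep is tied to how far a tiny mirror can physically tilt, this sketch also hints at why field of view is a constraint for this style of display.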

North also has a patent to embed a waveguide within a prescription lens. Interglass says they’re also working on a waveguide within a prescription lens, and DigiLens has IP around a curved waveguide applied to the surface of a prescription lens. A representative from Interglass has suggested that a holographic waveguide embedded within a prescription lens should be expected in time for the Consumer Electronics Show in January 2020.

The waveguide display also requires a “light engine,” or micro-display to project into its input grating—the image source. These are also miniaturizing, getting brighter and falling in power consumption.

In future generations, expect lenses to combine displays with tunable focus lenses.

Many researchers, including Nazmul Hasan at the University of Utah, working under Professor Carlos Mastrangelo, have been developing tunable-focus lenses. Used in combination with a depth sensor, the lenses change their focus from near to far based on where the wearer is looking, and can also change with the wearer’s prescription over time.

In an interview with Smithsonian, Mastrangelo explains, “This means that as the person’s prescription changes, the lenses can also compensate for that, and there is no need to buy another set for quite a long time.”
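The relationship between viewing distance and lens power can be sketched with simple arithmetic. The example below is a simplified thin-lens model, not the Utah team’s control method: it assumes an object at d meters demands 1/d diopters of focusing power, and that the lens supplies whatever the eye cannot on top of the wearer’s distance prescription. The function and parameter names are hypothetical, and a real fitting would typically hold some of the eye’s focusing ability in reserve.

```python
def target_lens_power_d(gaze_distance_m: float,
                        distance_rx_d: float = 0.0,
                        accommodation_d: float = 0.0) -> float:
    """Lens power, in diopters, to focus on an object at gaze_distance_m.

    distance_rx_d   -- the wearer's distance prescription (assumed input)
    accommodation_d -- focusing power the eye can still supply itself
    """
    if gaze_distance_m <= 0:
        raise ValueError("gaze distance must be positive")
    demand = 1.0 / gaze_distance_m               # focusing demand of the object
    add = max(0.0, demand - accommodation_d)     # shortfall the lens must cover
    return distance_rx_d + add


if __name__ == "__main__":
    # Hypothetical wearer: -2.00 D for distance, 0.5 D of accommodation left.
    print(target_lens_power_d(0.4, distance_rx_d=-2.0, accommodation_d=0.5))
    print(target_lens_power_d(10.0, distance_rx_d=-2.0, accommodation_d=0.5))
```

In this model, a depth sensor would supply the gaze distance, and a change in prescription is just a change to one input rather than a new pair of lenses.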

Perhaps the most intriguing development in the tunable-focus lens space is the partnership between two Israeli companies: Lumus optics, maker of waveguide-based near-eye optics display systems, has partnered with tunable-focus lens manufacturer DeepOptics to develop hybrid tunable-focus lenses with an embedded display system.

–CG

APPLE OF YOUR EYES

Apple is notoriously secretive, but through a combination of acquisitions, contracts, patent filings, key hires and occasional hints, we can see Apple’s display system taking form. In 2017, Apple poached waveguide engineer Michael Simmonds from BAE Systems’ military optics team and in the same month made a $200 million investment in Corning Glass for development of display technology. By September, Corning showcased their concept for waveguide display systems in a publicly released video. Corning Glass also specifies and supplies the material for other waveguide display manufacturers, including Wave Optics and DigiLens. Any pretense on the part of Apple to hide a smartglasses product in development was dropped completely when it was revealed that they had acquired Colorado-based holographic waveguide manufacturer Akonia Holographics. Add to this their acquisition of MicroLED manufacturer LuxVue and a more recently revealed manufacturing contract with TSMC to tool a manufacturing plant for MicroLED light engines, and we can see the form of Apple’s entire optics module taking shape.

Apple smartglasses are coming—and they’re coming soon.

–CG

Chris Grayson is a tech expert who writes about smartglasses and near-eye optics display systems, conducts research for investors, consults for tech companies and blogs at Giganti.Co.